If you are an internet marketer, or anyone entrusted with improving the traffic of your site, you are probably using Google Search Console. In fact, it is a must-use tool: it lets you know of any issues or error messages that affect your site. Today we will discuss one message that many of you may come across while checking how your site is indexed: “Indexed, though blocked by robots.txt”. What does it mean, and how do you resolve it? Let us find out.

How to resolve “Indexed, though blocked by robots.txt”?

This message means that Google has indexed a page even though your robots.txt file blocks it from being crawled. Often, it involves a page that you never wanted indexed in the first place. If that is the case, the best way to get rid of the issue is to deindex the page.

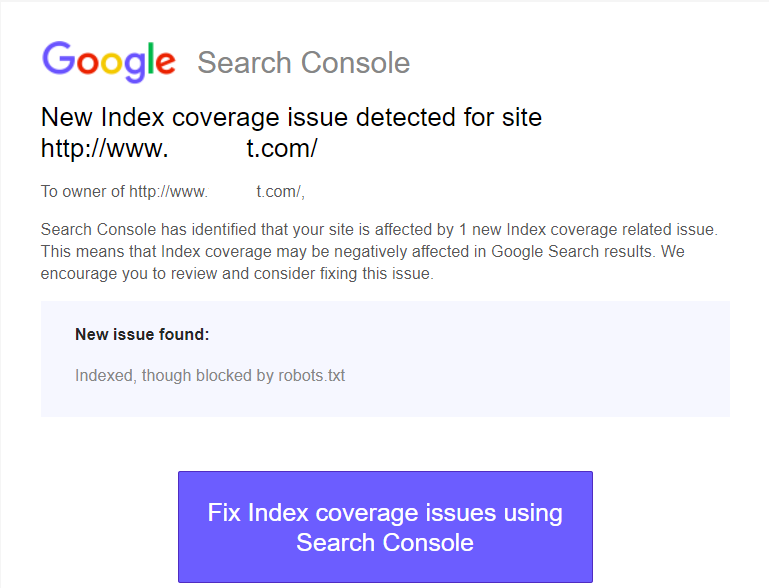

This is a common message to come across in Google Search Console while checking for issues that affect the traffic of your site. Before moving on to the message and its solutions, it is a good idea to understand what Google Search Console is.

Google Search Console – What Is It?

Well, anyone who has been involved with website traffic should be well aware of this utility from Google. It is a free tool that helps you identify and check the issues the search engine may face while crawling and indexing your pages.

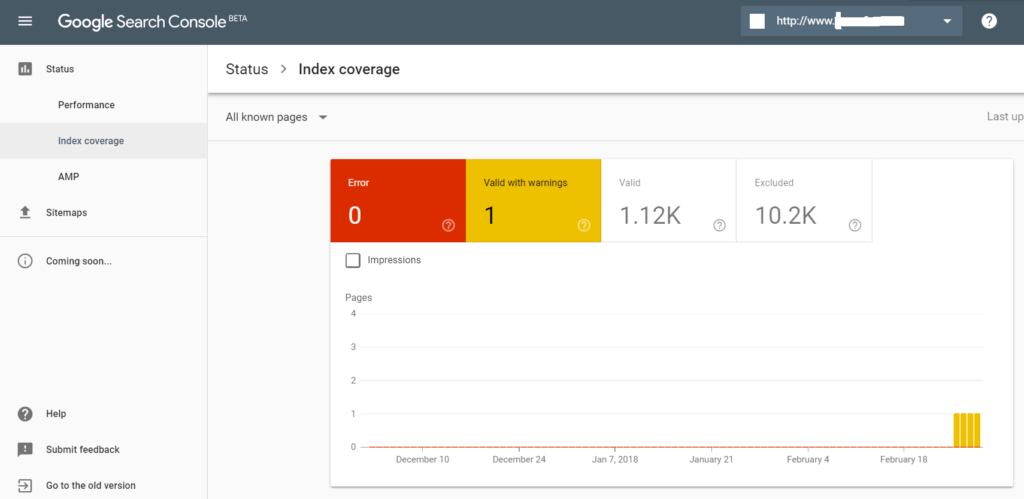

You should be aware that Google crawls and attempts to index your pages so that they can be shown in the search results. Google Search Console lets you identify, troubleshoot and resolve the issues that Google comes across while indexing your pages. You also get information on which pages are performing well in search and which ones Google is ignoring.

One of the features this tool offers is a detailed Index Coverage report. This report lists the pages on your website that Google tried to crawl and index, along with the issues it came across while doing so. Some of these messages may leave you puzzled. If you are not a technical person yourself, the reports can be quite confusing.

One such message is the one we are discussing here: “Indexed, though blocked by robots.txt”. What does it mean, and how do you resolve it? Read on to know more.

“Indexed, though blocked by robots.txt” – How To Fix?

This is a warning rather than an error. It is not as severe as some of the other errors you may see in Google Search Console. Even so, it needs to be attended to.

You should address the issue, though not urgently. It is ultimately up to Google to decide whether a page is added to its index, but resolving this warning gives you more control over whether and how the page appears in the search results.

What does this warning indicate? If you are worried about indexing, you need not be: the notice itself shows that your page has been indexed. However, the page is blocked by your robots.txt file, so Google could not crawl its content. This typically happens when other pages link to the blocked URL. The robots.txt file is a plain-text file at the root of your site that tells crawlers which parts of the site they may visit. It can allow some crawlers while blocking others. Check the rules in your robots.txt file and find out whether crawlers are blocked for the whole domain or on a page-by-page basis.

The robots.txt file may be interpreted differently by different crawlers. Please note that the file is only a set of directives: it cannot enforce itself, and badly behaved crawlers may simply ignore it.
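To see how the same robots.txt rules apply to different crawlers, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the `BadBot` crawler name and the example.com URLs are made up for illustration, and `is_allowed` is just a helper name chosen for this example. Python's parser applies the first matching rule, whereas Google picks the most specific one, so treat the result as an approximation for complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given crawler may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "https://example.com/blog/"),
        ("Googlebot", "https://example.com/private/page.html"),
        ("BadBot", "https://example.com/blog/"),
    ]:
        print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

A URL for which this check returns False for Googlebot, yet which still shows up in search results, is the kind of page that triggers the warning.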

Is There a Fix Available?

Well, it depends upon the robots.txt file of your site. You may have been overzealous and blocked all crawlers, including legitimate ones like Google, in an attempt to keep out a potentially harmful bot.

First, decide whether you want the page indexed or not. A page that is blocked in robots.txt suggests that, at some point, you did not want Google to reach it. Check the URL, and if you want it to stay indexed, remove the rule that blocks it from your robots.txt file.
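If you are not sure which rule is responsible, a short script can point you to it. The sketch below is a simplified illustration in Python: `matching_disallow_rules` is a hypothetical helper, it uses plain prefix matching only, and it ignores wildcards and user-agent groups, so it will not cover every robots.txt file.

```python
def matching_disallow_rules(robots_txt: str, path: str) -> list[tuple[int, str]]:
    """Return (line_number, rule) for each Disallow rule whose prefix matches path.

    Simplification: wildcards and user-agent groups are not taken into account.
    """
    matches = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        # An empty Disallow value blocks nothing, so it is skipped.
        if field.lower() == "disallow" and value and path.startswith(value):
            matches.append((number, value))
    return matches


if __name__ == "__main__":
    # Hypothetical robots.txt content, for illustration only.
    sample = "User-agent: *\nDisallow: /private/\nDisallow: /tmp/\n"
    print(matching_disallow_rules(sample, "/private/page.html"))
```

The line numbers it reports tell you where to look in the file when you decide to remove or narrow a rule.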

If you do not want the page to be indexed, the best option is to de-index it with a noindex tag. Keep in mind that Google can only see the tag if it is allowed to crawl the page, so the robots.txt rule that blocks the URL has to be removed for the noindex to take effect. Using the tag is quite simple: you only need to add it in the <head> section of your page.

<meta name="robots" content="noindex">

If you want to keep only Google's crawler from indexing the page, use the following tag:

<meta name="Googlebot" content="noindex">
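After adding the tag, you may want to confirm that it is really present in the HTML of the page. Here is a minimal sketch using Python's standard `html.parser` module. `RobotsMetaParser` and `has_noindex` are names invented for this example, and the check only looks at meta tags in the HTML string you pass in.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # lowercased meta name -> set of lowercased directives

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        name = attr.get("name", "").lower()
        if name in ("robots", "googlebot"):
            values = {
                item.strip().lower()
                for item in attr.get("content", "").split(",")
                if item.strip()
            }
            self.directives.setdefault(name, set()).update(values)


def has_noindex(html: str, crawler: str = "googlebot") -> bool:
    """Return True if a generic or crawler-specific noindex meta tag is present."""
    parser = RobotsMetaParser()
    parser.feed(html)
    generic = parser.directives.get("robots", set())
    specific = parser.directives.get(crawler.lower(), set())
    return "noindex" in generic or "noindex" in specific


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(has_noindex(page))
```

You would pass in the HTML of your own page, fetched however you prefer, in place of the sample string.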

It should be noted that the noindex tag may be interpreted differently by some crawlers, so you may still find the page indexed elsewhere even after you de-index it. If, on the other hand, you are sure the page can be indexed without issues, remove the rule that blocks it from your robots.txt file. The page can then be crawled and indexed normally, and you will no longer get the robots.txt warning.

Parting Thoughts

Well, that is all we have on the “Indexed, though blocked by robots.txt” message, which is a warning rather than an error. We hope you now understand why this warning appears and how it can be resolved. You can either deindex the page or, if you have no objection to it being indexed, remove the rule that blocks it from your robots.txt file.

If you still have doubts, share your questions in the comments section here. We will either answer them or guide you to the right resources.